A landing page succeeds when it does two things well. It clarifies value fast, and it nudges a qualified visitor to take the next step. A/B testing is how you make both happen with less guesswork and more signal. Done right, you do not chase trendy patterns or mimic a competitor’s hero section. You build a rhythm: hypothesize, ship, measure, learn, and fold the learning back into your web design services and digital marketing strategies. Over time, that rhythm compounds.

This guide comes from years of messy real-world tests across B2B SaaS, e-commerce, and lead gen. You will see what to test, how to size your experiments, when to stop, and how to keep your UI/UX design honest. You will also see where teams go sideways, like “testing” five variables at once or ignoring site speed while debating button colors. The craft is not glamorous, but it works.

What counts as a meaningful test

A landing page has a few pressure points that shape behavior: the promise at the top, the structure around it, the proof beneath it, and the friction at conversion. When we run tests, we stack them from high leverage to fine tuning. If you handle website development in-house or through an agency, align designers, writers, and engineers on this priority queue before you start.

The headline is usually the single highest-ROI variable. It sets the frame visitors use to interpret everything else. A clear, specific promise, often tied to a result or a customer type, can lift click-through or form completion by double digits. I have seen a B2B security client gain a 26 percent conversion lift by swapping a clever tagline for a blunt, outcome-based headline paired with a short subhead.

Right behind the headline sit your call to action, the form or checkout friction, and the first screen of visual hierarchy. Everything above the fold needs to cooperate: spacing, contrast, mobile behavior, and social proof. A landing page design that breathes on desktop but turns into a cramped tower on small screens will leak mobile conversions. Responsive web design is not just CSS breakpoints. It is intent mapping: on mobile, many users skim and decide fast. Make your first screen do more work, or let users jump to the point with navigation aids such as persistent CTAs and anchor links.

Proof sells for you while you sleep. Reviews, customer logos, star ratings, certifications, and data points make claims feel safe. Proof works best when it is specific and placed right before friction. A short testimonial next to pricing, a guarantee near the primary CTA, or a trust badge near credit card fields can move the needle without yelling.

A simple, reliable testing loop

You do not need a complex web development framework to run A/B tests well, but you do need a steady loop. The loop starts with a question grounded in user experience research, analytics, or performance diagnostics. It ends with a clear decision: keep, kill, or iterate.

- Define the problem with data. Use analytics to spot drop-offs, heatmaps to see attention patterns, and session replays to catch failed clicks. If your bounce rate on paid traffic is fine but your add-to-cart rate lags, the issue might be form friction or weak proof, not traffic quality.
- Draft a specific hypothesis. Avoid vague goals like “make it more engaging.” Try “reducing the form from seven fields to four will increase submit rate by 15 to 25 percent without hurting lead quality.”
- Ship a clean variant. Change one variable or a tightly related cluster, not five unrelated elements. Keep HTML/CSS coding tidy so you do not introduce layout bugs that muddy results.
- Run to significance with guardrails. Size your sample to reach at least 90 to 95 percent confidence under expected uplift ranges. Use sequential testing or Bayesian methods if your traffic is modest. Beware peeking too early.
- Decide and document. Roll out winners through your content management systems workflow, note key learnings in your web design tools and software, and line up the next test.
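
The "run to significance" step in the loop above can be sketched as a two-sided, two-proportion z-test. This is a minimal illustration using only Python's standard library, not a replacement for your testing tool's statistics. The function name and the visitor counts are hypothetical, and sequential or Bayesian methods would need different math.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control converts 300 of 10,000 sessions, variant 360 of 10,000.
z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 95 percent" if p < 0.05 else "keep running")
```

A fixed-horizon test like this one assumes you check the result once, at the planned sample size. Checking it daily and stopping at the first p-value under 0.05 is the peeking problem the loop warns about.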

That loop works because it respects a few constraints that reality imposes: limited traffic, uneven behavior across devices, seasonal swings, and the fact that no single change explains everything. You will rarely see a giant win that sticks unless you have analytics discipline and solid frontend development practices to keep variants performant.

Choosing what to test first

If you maintain a backlog the way a product team does, you will move faster and avoid vanity tests. Stack rank by expected impact and ease, then factor in technical risk. A few areas usually make the first cut.

Headlines and subheads. Use the language your customers use, not internal jargon. Pull phrases from sales calls, reviews, or surveys. If you run WordPress web design, create a modular hero section so the team can swap headlines without breaking layout.

Primary CTA label and placement. Clarity beats clever. If your CTA lives only at the bottom, add a top-level button and another near the key value prop. On mobile, test sticky CTAs that respect web accessibility standards. Make sure color contrast passes WCAG AA.
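
A quick way to check a CTA color pair against WCAG AA is to compute the contrast ratio from the relative luminance formula in the WCAG 2.x definition. The sketch below assumes six-digit hex colors; the helper names and the sample colors are illustrative, not part of any particular tool.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a six-digit hex color such as '#1a73e8'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    # WCAG AA asks for 4.5:1 on normal text and 3:1 on large text.
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


# Illustrative check: mid-gray label text on a white button.
print(round(contrast_ratio("#767676", "#ffffff"), 2), passes_aa("#767676", "#ffffff"))
```

Running a check like this on every sticky CTA variant before launch keeps an accessibility regression from contaminating the test.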

Form friction. If you cannot cut fields, chunk them and use smart defaults. Inline validation and human-friendly error messages do more than you think. For e-commerce web design, guest checkout frequently wins, and alternative payments can unlock incremental gains.

Visual hierarchy in web design. Spacing, type scale, and imagery direction matter. Test whether a product-centric hero outperforms a lifestyle image. Try a layout that surfaces pricing earlier, or one that introduces a comparison table for clarity. Proper visual hierarchy reduces cognitive load and directs attention where it counts.

Social proof and risk reversal. Test a testimonial near the CTA versus a carousel buried below. Test a clear guarantee statement next to the form. Replace generic “trusted by thousands” with the three most relevant client logos for that audience segment.

Page speed and stability. A slow or janky page punishes all variants. Website optimization is not a side quest, it is often the win. Measure LCP, CLS, and TBT, then reduce render-blocking scripts, compress imagery, and ship only the CSS you need. I have seen performance fixes alone lift conversion by 8 to 20 percent.

Segmentation that respects reality

The quickest way to muddy a test is to mix audiences with very different intent. Segment where it matters most: device type, traffic source, and sometimes geography. Mobile users may prefer tap-friendly CTAs and shorter copy. Paid search visitors arriving on a specific query might need product specs up front, while brand traffic may need less convincing and more direct paths.

If you work with content-heavy campaigns, build SEO-friendly websites that offer a separate, lean landing experience for campaigns while keeping organic pages content rich and indexable. Do not let your A/B testing tool generate URLs that search engines index by accident. Use canonical tags and noindex where appropriate, and lean on your CMS to manage variants in a way that avoids duplication.

For B2B funnels, firmographic segmentation can help, but be cautious. Dynamic “personalization” based on weak signals can misfire. A more reliable approach: write variants that speak to the top two customer profiles you actually close, then route traffic based on clear campaign intent rather than shaky identification.

Ensuring clean measurement

If your instrumentation is messy, your decisions will be too. Tie your test goal to a conversion that reflects business value, not just a click. For lead gen, that could be completed form plus a downstream quality metric such as MQL rate or cost per SQL. For e-commerce, track conversion rate and contribution margin, not only revenue per session.

Keep events consistent across variants. Use a tag manager to fire conversion events on server-verified outcomes when possible. If you rely on client-side events, audit them in website performance testing to ensure they are not blocked by ad blockers or broken by DOM changes. Align naming conventions across your analytics, ad platforms, and A/B testing software so your team can trace outcomes end to end.

The right sample size and test length

There is no magic number, but there is a logical process. Start with your baseline conversion rate, target uplift, and average daily sessions per variant. Plug those into a calculator and estimate the days required to hit 90 to 95 percent confidence. Add a buffer for weekends and seasonality. If each variant receives 300 sessions a day and the page converts at 3 percent, detecting a 15 percent relative lift at 95 percent confidence and 80 percent power takes roughly 24,000 sessions per variant, or about 80 days. Smaller uplifts need more time.
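
The calculator step can be approximated with the standard normal-approximation formula for comparing two proportions. The sketch below is one common way to do it, not the method of any specific tool. The function name, the 80 percent power default, and the assumption that the target uplift is relative to the baseline are mine.

```python
from math import ceil
from statistics import NormalDist


def sessions_per_variant(baseline: float, relative_lift: float,
                         confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate sessions needed per variant for a two-sided test of two proportions."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# 3 percent baseline, 15 percent relative lift, 300 daily sessions reaching each variant.
needed = sessions_per_variant(baseline=0.03, relative_lift=0.15)
days = ceil(needed / 300)
print(needed, days)
```

Dividing the result by daily sessions per variant gives the runtime estimate. Halving the detectable lift roughly quadruples the required sample, which is why small uplifts are so expensive to confirm on modest traffic.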

Avoid cutting a test short when it looks good in the first few days. Early spikes usually regress. Run full business cycles if possible so you capture weekday and weekend behavior. Lock in traffic allocation and do not change other parts of the page mid-test. If you must fix a bug, annotate it and consider restarting if the fix could influence behavior.

Crafting variants without breaking the brand

A/B testing sometimes degenerates into whack-a-mole changes that chip away at branding and identity design. Guardrails help. Define non-negotiables such as logo usage, minimum contrast, type scale, and spacing. Designers can then explore bold headline angles, imagery approaches, and layout shifts within that system.

Consistency does not mean sameness. A variant can challenge your assumptions without looking off-brand. For instance, a clean, minimalist layout might get outperformed by a variant that introduces a comparison module and a pricing teaser earlier in the scroll. That does not betray brand, it clarifies value.

If your team maintains a design system in Figma and codes components in a modern web development framework, create test-friendly variants of hero, pricing, and testimonial components. Wireframing and prototyping these ahead of time shortens cycles and avoids last-minute hacks that slow pages down.

Mobile is not a scaled-down desktop

A common misstep is to design on a 1440-pixel monitor, then shoehorn it to mobile. The mobile-first version of a landing page should make a faster promise, surface the CTA earlier, and tighten copy. A sticky bottom CTA can make sense for sign-up or add-to-cart, but test its wording and screen coverage so it does not obscure key content or violate accessibility.

On small screens, autoplay video often hurts. Lightweight animation can help if it conveys function, but heavy motion or Lottie loops tend to drag performance. If you include video, offer a tap-to-play with an informative poster frame and captions, and defer loading until requested. Touch targets must meet web accessibility standards for size and spacing, and focus states should be visible for keyboard users on larger devices. Accessibility is not only ethical, it protects conversion by avoiding accidental taps and confusion.

Copy that earns attention

Short copy is not inherently better. Clear copy is. Write to the prospect’s moment and job to be done. If they arrive from a search ad for “custom website design for salons,” lead with that outcome and the niche expertise you bring, not a generic claim about award-winning UI/UX design. Mirror the phrasing they used to find you.

Structure matters. One sharp headline, a supportive subhead, a tight list of outcomes, and a button that says exactly what happens next. If you must use a longer page, repeat the CTA after each meaningful block. For technical products, add a short explanation that demystifies the how without drifting into jargon. If you sell website development packages, include starting prices or ranges rather than hiding behind vague “contact us” CTAs. Transparent pricing often reduces unqualified leads and improves close rates.

Visuals that do real work

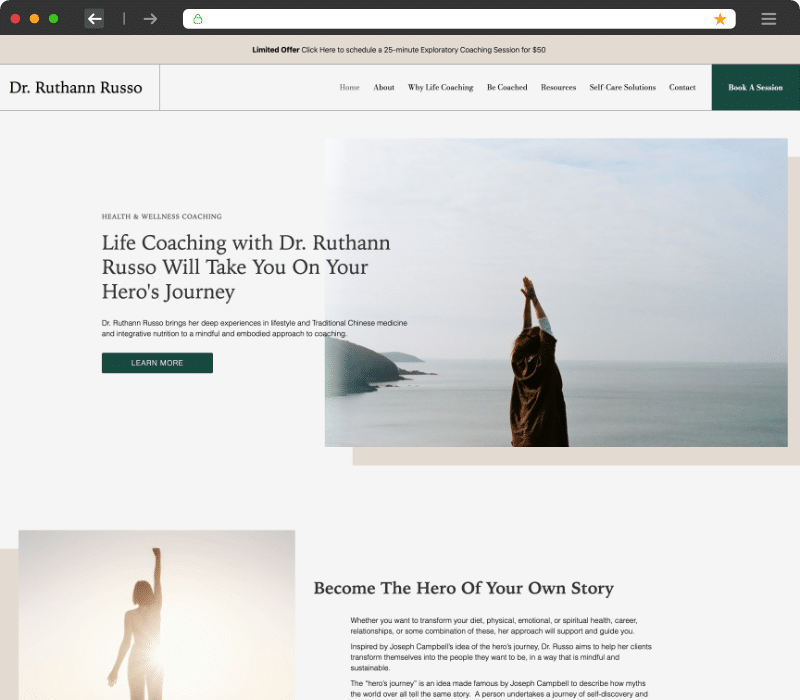

Images should reduce uncertainty, not just decorate the page. Show the product in use, the dashboard with real data, or the before-and-after of a redesign. For services like WordPress web design or responsive redesigns, a concise case study module can outperform a generic collage. Include a short outcome metric: “Page speed from 4.1s to 1.6s, organic conversions up 19 percent.” Those specifics build trust.

Graphic design choices influence comprehension. High-contrast buttons, generous white space, and a logical scan path guide the eye. Avoid center-aligned paragraphs for long text blocks. Use captions under images to carry key claims because people read captions more than body text. If you use icons, label them explicitly since icons alone are not self-explanatory.

Performance and reliability: the hidden multiplier

A fast, stable page lifts all variants. Audit your critical rendering path. Inline only the CSS needed for the first screen, defer the rest, and eliminate unused CSS from bloated frameworks. Load fonts responsibly to avoid layout shifts. Serve images in modern formats and right-size them per breakpoint. If you rely on multiple analytics and chat scripts, consider server-side tagging or consolidating vendors.

Treat website performance testing as part of every experiment. Measure Core Web Vitals for each variant. If Variant B wins on conversion but increases CLS or TTFB, you may trade short-term gains for long-term SEO losses. Staging your experiments in a controlled environment before going live catches regressions early. For teams shipping frequent tests, set a performance budget that every variant must respect.
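
A performance budget can be as simple as a dictionary of limits that every variant is checked against before launch. In this sketch the LCP and CLS limits follow Google's published "good" thresholds, while the TBT limit, the metric keys, and the sample measurements are assumptions to adapt to your own tooling.

```python
# Hypothetical budget: LCP in seconds, CLS unitless, TBT in milliseconds.
BUDGET = {"lcp_s": 2.5, "cls": 0.1, "tbt_ms": 200}


def budget_violations(metrics: dict[str, float], budget: dict[str, float] = BUDGET) -> list[str]:
    """Return the names of metrics that exceed the budget or were not measured."""
    violations = []
    for name, limit in budget.items():
        value = metrics.get(name)
        if value is None or value > limit:
            violations.append(name)
    return violations


# Illustrative lab measurements for a variant that adds a late-loading testimonial block.
variant_b = {"lcp_s": 2.1, "cls": 0.18, "tbt_ms": 150}
print(budget_violations(variant_b))
```

Treating a missing metric as a violation is deliberate: a variant that was never measured should not pass the gate by default.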

When multivariate testing makes sense

Most teams do not have the traffic for reliable multivariate testing. If your page sees low to moderate traffic, stick to A/B or A/B/n with a small number of variants. Multivariate can help when you have large volumes and want to test interaction effects among a small set of elements such as headline, hero image, and CTA. Even then, constrain combinations to avoid splitting traffic too thin. The practical rule: fewer, cleaner tests beat many noisy ones.
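
The traffic-splitting problem is easy to see with a little arithmetic. The sketch below assumes a full-factorial design with an even split across combinations; the traffic figures and element counts are hypothetical.

```python
from math import prod


def sessions_per_cell(daily_sessions: int, days: int, levels: list[int]) -> float:
    """Sessions each combination receives in a full-factorial test with an even split."""
    combinations = prod(levels)
    return daily_sessions * days / combinations


# Hypothetical: 3 headlines x 2 hero images x 2 CTAs on a page with 1,000 daily sessions.
levels = [3, 2, 2]
print(prod(levels))                          # number of combinations
print(sessions_per_cell(1_000, 14, levels))  # sessions per combination after two weeks
```

Every extra element multiplies the number of cells, so each combination sees only a fraction of the traffic a plain A/B variant would get over the same period.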

Tying tests to business outcomes

A higher click rate on a top CTA is encouraging, but the north star should be revenue, qualified leads, or booked appointments. Connect the dots in your analytics pipeline. Track variant ID through to downstream conversions. If you run CRM-integrated funnels, pass variant metadata into the CRM to measure MQL to SQL conversion by variant. A CTA that inflates leads but weakens win rate is not a win.
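
Once the variant ID travels with each lead, comparing downstream quality is a small aggregation. The sketch below assumes a hypothetical CRM export with one row per lead and boolean `mql` and `sql` flags; the field names and sample rows are made up for illustration.

```python
from collections import defaultdict

# Hypothetical CRM export: one row per lead, carrying the variant ID captured at form submit.
leads = [
    {"variant": "A", "mql": True, "sql": True},
    {"variant": "A", "mql": True, "sql": False},
    {"variant": "A", "mql": False, "sql": False},
    {"variant": "B", "mql": True, "sql": False},
    {"variant": "B", "mql": True, "sql": False},
    {"variant": "B", "mql": True, "sql": True},
    {"variant": "B", "mql": True, "sql": False},
]


def mql_to_sql_by_variant(rows: list[dict]) -> dict[str, float]:
    """Share of MQLs that became SQLs, grouped by variant."""
    mqls = defaultdict(int)
    sqls = defaultdict(int)
    for row in rows:
        if row["mql"]:
            mqls[row["variant"]] += 1
            sqls[row["variant"]] += int(row["sql"])
    return {variant: sqls[variant] / mqls[variant] for variant in mqls}


print(mql_to_sql_by_variant(leads))
```

In this toy data, variant B produces more MQLs but converts a smaller share of them to SQLs, which is the pattern a click-only metric would hide.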

This discipline influences design choices. For example, an aggressive scarcity timer might spike short-term sales but increase refunds. A smoother, trust-focused design with transparent shipping and clear return policies might convert slightly fewer visitors but yield higher net revenue. Optimize for the right hill.

Case notes from the field

A mid-market SaaS company offered a free trial with a seven-field form. Sales insisted every field was essential. We ran a two-step form variant: email first, then the remaining fields after a progress marker and privacy note. Completion rose 22 percent, and downstream qualification held steady. The key was not removing fields, it was managing perceived effort and risk.

An online retailer with strong brand traffic saw declining mobile conversion. Analysis showed a slow hero video and a carousel that hid key products. We removed the auto-playing video on mobile, replaced the carousel with a static grid above the fold, and added a sticky, color-contrast “Add to bag” button on product pages. Mobile conversion increased 14 percent. The win came from performance and visual hierarchy, not a new color palette.

A boutique agency selling custom website design struggled on paid search. The landing page touted awards and a generic promise. We built two variants. One led with niche expertise by industry, each with a compact portfolio slice and a starting price range. The other used a diagnostic angle with a “Get your 5-minute audit” lead magnet. The niche variant lifted qualified leads by 31 percent, while the audit attracted volume but weaker fit. The takeaway: align message with buyer intent before experimenting with hooks.

Collaboration and workflow

A/B testing lives at the intersection of copy, design, and code. Smooth handoffs matter. Maintain a shared backlog in your project tool with hypotheses, expected impact, and status. Designers provide wireframes or comps for variants. Developers implement within the component system and ensure accessibility and performance. Marketers define success metrics, configure the experiment, and monitor quality.

If your stack uses modern web development frameworks such as Next.js or Nuxt, consider server-side variant rendering to improve performance and reduce flicker. Use feature flags to control rollout and kill switches. For teams on popular content management systems, set up reusable landing templates with slot-based regions so non-technical marketers can compose variants without breaking layout.
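
Server-side variant rendering depends on assigning each visitor to a variant before the page is built, and doing so consistently across visits. The sketch below shows one common approach, deterministic hashing of a visitor ID, written in Python for illustration. It is not the API of Next.js, Nuxt, or any feature-flag product, and the function names and `enabled` flag are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically map a visitor to a variant so repeat visits see the same page."""
    # Salting with the experiment name keeps assignments independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


def variant_for_request(visitor_id: str, experiment: str, enabled: bool) -> str:
    """Hypothetical kill switch: when the flag is off, everyone gets the control."""
    return assign_variant(visitor_id, experiment) if enabled else "A"


print(variant_for_request("visitor-123", "hero-headline", enabled=True))
```

Because the assignment is a pure function of the visitor ID and experiment name, the server can render the right variant without a client-side swap, which is what removes the flicker.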

Avoiding common pitfalls

Peeking and declaring victory too soon is the classic mistake. The second is testing trivial details while bigger issues fester. If load time is 4 seconds on mobile, your button shade does not matter. Another trap is mixing major traffic sources in one test without segmentation, which hides impact.

Beware inconsistent attribution. If Google Ads and your experiment platform disagree on conversion counts, investigate tagging, deduplication, and cookie consent flows. Map out your consent UX so it does not block tracking on one variant and not the other. And always sanity-check extremes. If a variant shows a 60 percent lift in a day, it is probably misfiring instrumentation, not genius design.

The role of trends and taste

Web design trends can inspire but should not dictate your tests. Glassmorphism, giant typography, or ultra-minimal layouts can work or flop depending on audience and context. Treat trends as hypotheses. Does the large-type, single-column layout improve readability metrics and time to value? Does a high-contrast, editorial look enhance perceived authority for your market? Test with purpose, not for aesthetics alone.

Taste still matters. Experienced designers make choices that set up better tests. A thoughtful user interface design reduces cognitive load, which improves the odds that any value-focused copy will land. Testing is not an excuse to abdicate taste, it is a way to refine it with evidence.

Building a durable testing culture

A culture forms when the team values evidence over ego, and when learning is shared. Keep a simple test log that captures hypothesis, screenshots, key metrics, runtime, and decision. Review it monthly. Patterns will emerge: your audience might prefer concrete ROI claims, or they may respond to comparison tables more than long narrative. Your developers will see recurring performance hotspots, your writers will develop a voice that converts, and your designers will hone patterns that balance beauty with clarity.

Run post-mortems on big swings that lost. Those often teach more than the winners. Share screenshots with notes so future teammates avoid repeating the same dead ends. If you work across several landing pages, roll improvements laterally. A testimonial layout that wins on one page often helps others, especially if your brand sells related services like SEO-friendly websites, website redesign, or e-commerce design.

A brief checklist to keep handy

- Define a clear hypothesis tied to a business metric, not vanity clicks.
- Fix performance and accessibility before debating cosmetic tweaks.
- Segment by device and traffic source when behavior diverges.
- Run long enough to reach significance across a full business cycle.
- Document learnings and roll out winners within your design system.

Bringing it together

A/B testing is craft work. The tools matter less than the habits: careful observation, honest measurement, and steady iteration. Pair that with disciplined UI/UX design, solid HTML/CSS coding, and pragmatic website optimization. Give mobile the first-class attention it deserves. Respect accessibility. Let proof speak where hype used to. If you keep that rhythm, your landing pages will grow clearer, faster, and more persuasive, and your conversion rate optimization will move from sporadic wins to repeatable improvements.

For teams offering or using web design services, the payoff runs deeper than a single uplift number. You build components that are easier to maintain, content that maps to real intent, and a decision-making process that scales as campaigns multiply. Whether you are shipping a WordPress web design for a local retailer or a custom landing experience for a high-volume SaaS funnel, the same loop applies. Fewer guesses, better bets, and pages that earn their keep.

Radiant Elephant 35 State Street Northampton, MA 01060 +14132995300